This "Quantum Leap Beginner Guides" installment focuses on entanglement, a fundamental quantum property that intrinsically links quantum particles. This linkage gives quantum computers much of their computing power, and despite challenges like the fragility of qubits, significant progress continues. Entanglement also enhances the precision and speed of quantum sensors, with potentially transformative impacts in fields including defense, healthcare, and aerospace.

The Quantum Leap’s Beginner Guide to “Superposition”

Superposition describes a quantum system’s ability to be in multiple states at the same time until it is measured. We can use superposition to impart additional information and features on qubits, which enables quantum computers to do things that classical computers cannot.
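
For readers who like to tinker, here is a minimal sketch of what "multiple states until measured" means in practice. It is a toy classical simulation, not a real quantum program: the `measure` helper, the fixed random seed, and the choice of an equal superposition are all assumptions made for illustration. Each simulated measurement returns 0 or 1 with probability equal to the squared magnitude of that state's amplitude.

```python
import math
import random


def measure(amp0, amp1, rng):
    """Simulate one measurement of a single qubit.

    amp0 and amp1 are the amplitudes of the 0 and 1 states
    (hypothetical helper, for illustration only).
    """
    p0 = abs(amp0) ** 2  # probability of reading 0 is |amplitude|^2
    return 0 if rng.random() < p0 else 1


rng = random.Random(42)   # fixed seed so repeated runs match (an assumption)
amp = 1 / math.sqrt(2)    # equal superposition of 0 and 1

counts = {0: 0, 1: 0}
for _ in range(10_000):
    counts[measure(amp, amp, rng)] += 1

print(counts)  # roughly half zeros and half ones
```

Each individual measurement gives a definite 0 or 1; the superposition only shows up in the statistics over many runs.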

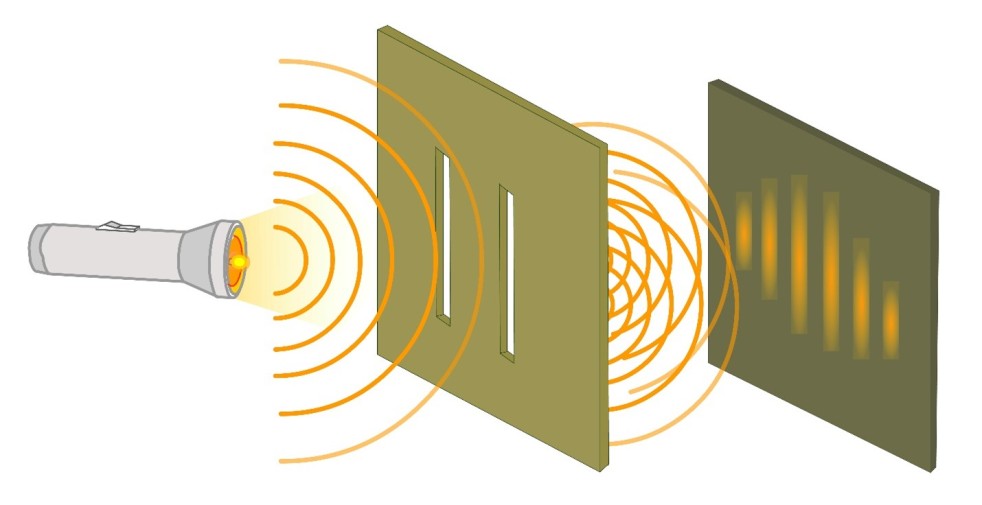

The Quantum Leap’s Beginner Guide to “Wave-Particle Duality”

Quantum objects (e.g., atoms, photons, electrons) exhibit both “particle” behaviors and “wave” behaviors. You don’t need to understand the physics behind this non-intuitive duality to appreciate that we are now beginning to take advantage of it and use its effects to do some amazing things, like creating ultra-precise sensors and massively powerful quantum computers.

The Quantum Leap’s Beginner Guide to “Qubits”

As we learn to tame the quantum properties of elementary particles, we are finding that profound new things can be done, including the creation of ultra-powerful sensors and computers.

The Quantum Leap’s Beginner Guide to “Quantum”

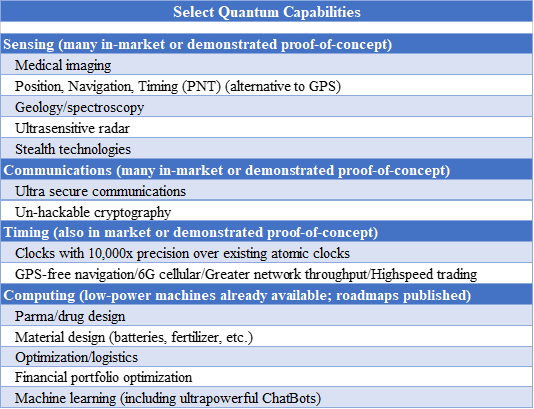

Quantum technology offers immense, potentially game-changing new capabilities across a variety of industries. Stay tuned to the Quantum Leap for continued coverage of this emerging and vital technology, hopefully in a language all readers can understand.

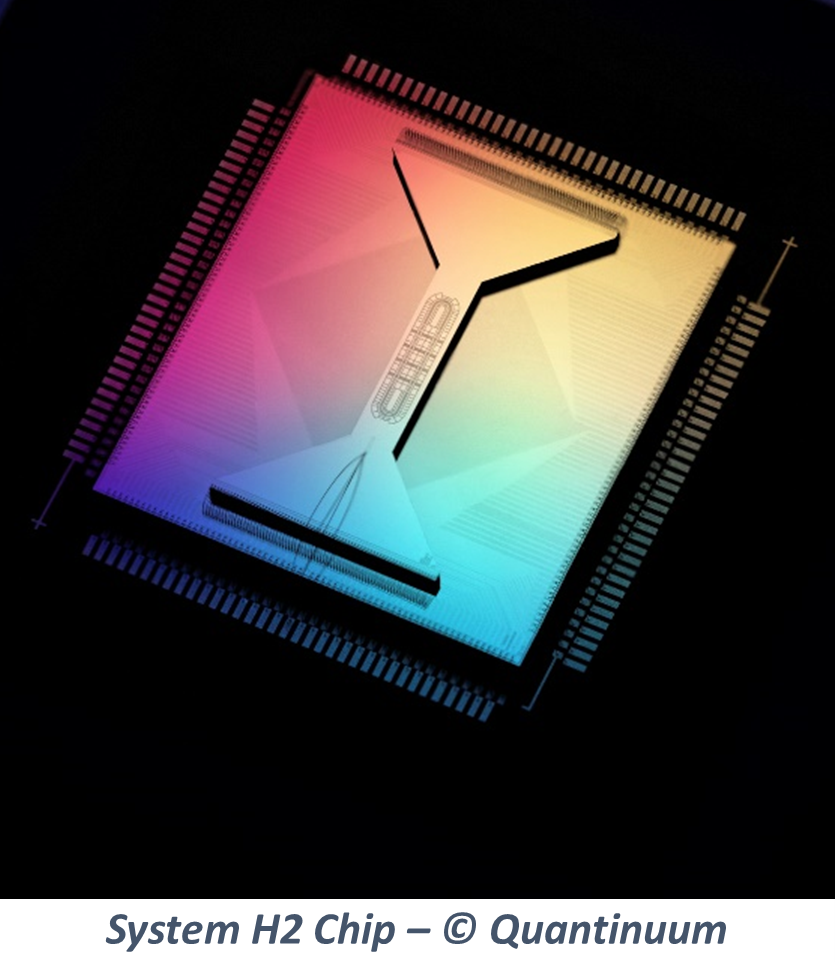

Quantinuum – Company Update and System Model H2 Release

Quantinuum announces the release of its System Model H2 32-qubit quantum computer, among other impressive achievements.

The Denver-Boulder Quantum Ecosystem

The Denver-Boulder quantum ecosystem is vibrant, dynamic and growing.

What [Would/Should/Could] We Ask a Quantum Computer?

What do you think the new “killer app” will be on a Quantum Computer? What question would you like a QC to answer? I’d love to hear your ideas.

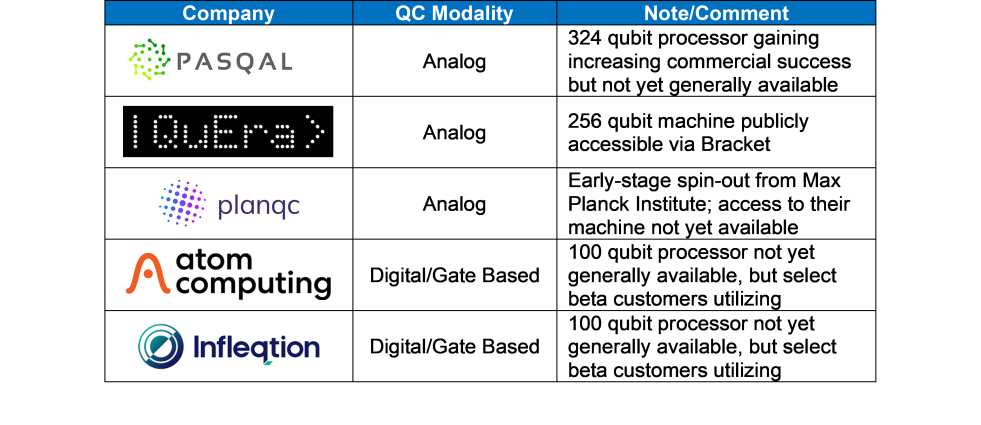

Quantum Computing with Neutral Atoms

Why the recent surge in jaw-dropping announcements by neutral atom players? Why do neutral atoms seem to be leapfrogging other qubit modalities? Read the latest Quantum Leap blog post to find out.

Does Anybody Really Know What Time It Is?

If we can now readily obtain the “official” time by syncing our cell phone with GPS satellites or our computer with an atomic clock that is accurate to within one second per 60 million years, why do we need to measure time more accurately than that? Keep reading and I hope you’ll understand.
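
To put "one second per 60 million years" in perspective, the short calculation below converts it into a fractional error. It is a back-of-the-envelope sketch: the variable names are made up for illustration, and it assumes a 365.25-day year.

```python
SECONDS_PER_DAY = 86_400
DAYS_PER_YEAR = 365.25  # assumed average year length

years = 60_000_000
seconds_elapsed = years * DAYS_PER_YEAR * SECONDS_PER_DAY

# A drift of one second over that span, expressed as a fraction
fractional_error = 1 / seconds_elapsed

print(f"Seconds in 60 million years: {seconds_elapsed:.3e}")
print(f"Fractional error: {fractional_error:.1e}")
```

Under these assumptions, the clock is off by roughly 5 parts in 10 quadrillion.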